The Case For JPEG; It’s Not Just A Small Print And E-Mail Format

Many people tend to associate JPEGs with poor quality. However, when a JPEG

has poor quality, it's the result of the format being used incorrectly,

not a flaw in the format itself. Used properly, JPEG can and will produce a

file that is visually indistinguishable from one saved in any other format.

The main advantage of JPEG is clearly its superior compression. An RGB image,

without any compression applied, requires 24 bits of storage for each pixel.

Using lossless formats such as TIFF and PNG, you could compress that RGB image

to about 1/2-1/3 its original size. However, using JPEG, you could compress

that same image to 1/10-1/20 its original size, with no loss of visual quality.

That means fitting 10-20 times more images on any given hard drive and on any

given memory card. These aren't arbitrary statistics; they're the

official word of the Joint Photographic Experts Group, the creators of the JPEG

format.
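
To put those ratios in concrete terms, here is a rough sketch in Python. The 3000 x 2000 pixel image is a hypothetical example of my own, not a figure from the JPEG group, and the function names are purely illustrative. Actual file sizes depend heavily on image content.

```python
# Back-of-the-envelope storage estimate using the ratios quoted above.
BITS_PER_PIXEL = 24  # uncompressed RGB: 8 bits each for red, green, blue


def uncompressed_bytes(width, height):
    """Size of an uncompressed RGB image in bytes."""
    return width * height * BITS_PER_PIXEL // 8


def size_range(raw_bytes, max_divisor, min_divisor):
    """Return (smallest, largest) size for a range such as 1/3 to 1/2."""
    return raw_bytes / max_divisor, raw_bytes / min_divisor


raw = uncompressed_bytes(3000, 2000)   # hypothetical 6-megapixel photo
lossless = size_range(raw, 3, 2)       # TIFF/PNG: about 1/2 to 1/3
jpeg = size_range(raw, 20, 10)         # JPEG: about 1/10 to 1/20

MB = 1_000_000
print(f"Uncompressed: {raw / MB:.1f} MB")
print(f"Lossless:     {lossless[0] / MB:.1f} to {lossless[1] / MB:.1f} MB")
print(f"JPEG:         {jpeg[0] / MB:.1f} to {jpeg[1] / MB:.1f} MB")
```

Under these assumptions, the same photo that needs 18 MB uncompressed lands somewhere around 1 to 2 MB as a JPEG.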

Compared with a camera format such as raw, JPEG offers additional

advantages. The JPEG format is standardized; there is no wide

variation in the software needed to handle JPEG images. The software needed

to support using raw images varies widely among camera manufacturers, and in

some cases, a single manufacturer may use more than one piece of software, depending

on the price of the camera. Also, with JPEG, you avoid the time-consuming conversion

process needed to transfer raw files into a more practical format for printing,

uploading, and storing.

We've all seen the disadvantages of JPEG when it's used improperly.

Overly compressed (or, more accurately, incorrectly compressed) JPEGs contain pixel artifacts

that clearly affect an image's quality. Also, it's important to

keep in mind that JPEG is a photographic file format. It should not be used

to compress non-photographic images, since artifacts are almost certain to appear.

The Print Misconception: Resolution Issues

There is a somewhat widely held misconception that JPEG either should not or

even cannot be used for prints. This actually could not be further from the

truth. JPEG can be used for prints in the same way as TIFF, PSD, PNG, and many

other file formats.

This misconception came into being from the theory that there might be artifacts

in JPEG, and that these artifacts might in some way be more noticeable in prints.

First off, it is very possible to create JPEGs without artifacts. Second, even

if there were artifacts in a JPEG, those artifacts would be less noticeable

in print than they would be on a computer monitor. The reason is simple:

Computer monitors typically use a display resolution of 72-96 pixels (or dots)

per inch. The higher resolution of the print, typically 300 dots per inch (3-4

times higher than screen resolution), squeezes the dots of ink closer together,

something that's impossible on a monitor. The result is that any artifacts

that would, in theory, be present, would be reduced to 1/3-1/4 of their original

size when printed. Again, this isn't just my opinion; it's also

the opinion of the Joint Photographic Experts Group.
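
The arithmetic behind that claim can be sketched in a few lines of Python. This assumes, as the paragraph above does, that one image pixel maps to one printed dot. It also uses an 8-pixel-wide block (the unit JPEG compresses in) as a stand-in for a typical artifact, which is my own choice for illustration; the function names are hypothetical.

```python
MM_PER_INCH = 25.4


def feature_size_mm(pixels, pixels_per_inch):
    """Physical width of a feature that is `pixels` wide at a given resolution."""
    return pixels / pixels_per_inch * MM_PER_INCH


def shrink_factor(screen_ppi, print_dpi):
    """How many times smaller a feature appears in print than on screen."""
    return print_dpi / screen_ppi


ARTIFACT_PIXELS = 8  # assumed artifact width: one 8x8 JPEG block

for ppi in (72, 96, 300):
    print(f"{ppi:>3} per inch: {feature_size_mm(ARTIFACT_PIXELS, ppi):.2f} mm wide")

print(f"72 -> 300: {shrink_factor(72, 300):.2f}x smaller")
print(f"96 -> 300: {shrink_factor(96, 300):.3f}x smaller")
```

The two shrink factors come out a little above 4 and a little above 3, which is where the 1/3-1/4 figure comes from.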

The Print Misconception: Color Models

JPEG's color model has also led some to believe that it may make JPEGs

unsuitable for print. JPEG uses the YUV color model (strictly speaking, its digital form, YCbCr), a variation on the common

RGB color model. The concern is that the YUV color model may be incompatible

with the CMYK color model that's often used to make color adjustments

before printing.

It's understandable how this issue could have given rise to concerns over

printing. However, it's very important to keep in mind that conversion

from YUV to CMYK is a function of Photoshop, not a function of the file format.

Since Photoshop is perfectly capable of making this conversion (and many other

color model conversions), the issue poses no problem for JPEG.
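
To illustrate that this conversion is just arithmetic performed by software after the file has been decoded, here is a minimal sketch. It assumes the YCbCr equations used by the JFIF flavor of JPEG and a naive textbook CMYK formula. It is not how Photoshop does it; real print workflows rely on ICC color profiles, and the function names here are hypothetical.

```python
def clamp8(value):
    """Round and limit a value to the 0-255 range of an 8-bit channel."""
    return max(0, min(255, round(value)))


def ycbcr_to_rgb(y, cb, cr):
    """Decode one full-range YCbCr pixel to RGB (JFIF-style equations)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return clamp8(r), clamp8(g), clamp8(b)


def rgb_to_naive_cmyk(r, g, b):
    """Profile-free RGB -> CMYK, for illustration only. Returns 0.0-1.0 values."""
    r, g, b = r / 255, g / 255, b / 255
    k = 1 - max(r, g, b)
    if k == 1:  # pure black: avoid dividing by zero
        return 0.0, 0.0, 0.0, 1.0
    c = (1 - r - k) / (1 - k)
    m = (1 - g - k) / (1 - k)
    y = (1 - b - k) / (1 - k)
    return c, m, y, k


# A mid-gray pixel as stored in the file: neutral chroma (128, 128).
rgb = ycbcr_to_rgb(128, 128, 128)
print(rgb, rgb_to_naive_cmyk(*rgb))
```

Nothing in either function touches the file format. Once the pixels are decoded, the application is free to convert them however it likes.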

When JPEGs Go Bad

Everyone who has ever used the JPEG format is aware of the JPEG quality setting.

Digital cameras typically offer four quality settings (such as Fair, Good,

Better, and Best), while software usually offers a sliding scale (such as Photoshop's

0-12 setting, or ImageReady's 0-100 setting). What most people aren't

aware of is that behind the scenes, another important type of compression is

going on, one that can affect your images as much as, and at times more than,

the quality setting. This additional type of compression is known as "subsampling."

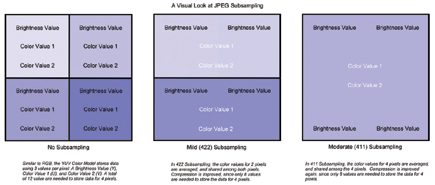

To understand subsampling, a brief look at JPEG's YUV color model is required.

YUV breaks image data down into three unique channels: Y (the luminosity or

brightness channel), and U and V (both of which are color channels). Compare

this to RGB, the color model used by computer monitors. In RGB, each of the

channels carries both brightness and color data. You can't create 100 percent

brightness (white) without setting all three color channels to maximum. You

also can't get 0 percent brightness (black) without setting all three

color channels to zero. In RGB, color and brightness are inextricably connected.

In YUV, brightness and color are handled separately.
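
A small sketch makes the separation visible. It assumes the full-range YCbCr equations used by JFIF files (YCbCr being the digital form of YUV that JPEG files normally carry); the function names are my own.

```python
def clamp8(value):
    """Round and limit a value to the 0-255 range of an 8-bit channel."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Split an RGB pixel into brightness (Y) and two color channels (Cb, Cr)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return clamp8(y), clamp8(cb), clamp8(cr)


# Black, gray, and white differ only in the brightness channel;
# both color channels stay at the neutral value 128.
for pixel in [(0, 0, 0), (128, 128, 128), (255, 255, 255)]:
    print(pixel, "->", rgb_to_ycbcr(*pixel))

# A saturated red moves the color channels away from 128.
print((255, 0, 0), "->", rgb_to_ycbcr(255, 0, 0))
```

In RGB, going from black to white means changing all three channels. Here only Y changes, which is what lets JPEG treat the color channels differently from brightness.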

Managing image data in this way gives JPEG a unique capability: since the human

eye is more sensitive to changes in luminosity/brightness than it is to changes

in color, additional compression can be applied to the color channels, sometimes

without affecting the overall quality of the image. Of course, the key word here

is "sometimes." You can't always accurately predict when subsampling

will and will not affect an image's visual quality. For this reason, it's

important to always avoid subsampling when snapping a photo.
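
Here is a minimal sketch of why the result is so hard to predict. It assumes one common scheme (often written 4:2:0) in which each 2x2 block of a color channel is averaged down to a single value, then stretched back out on decoding. Real encoders and decoders may filter differently, and the function names are hypothetical.

```python
def subsample_420(plane):
    """Average each 2x2 block of a color channel (even width and height assumed)."""
    height, width = len(plane), len(plane[0])
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def upsample_nearest(plane):
    """Stretch a subsampled channel back to full size by repeating each value."""
    out = []
    for row in plane:
        wide = [value for value in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


smooth = [[100, 100, 100, 100], [100, 100, 100, 100]]   # flat area of color
stripes = [[0, 255, 0, 255], [0, 255, 0, 255]]          # one-pixel color stripes

print(upsample_nearest(subsample_420(smooth)))   # flat color survives intact
print(upsample_nearest(subsample_420(stripes)))  # stripes collapse to 127.5
```

A smooth patch of color comes back unchanged, while fine color detail is averaged away. Which of the two a given photo contains is exactly what you can't know in advance.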

That may sound easy enough, until you ask the following question: "Exactly

how do I avoid subsampling?" Most camera manufacturers consider the exact

details of their JPEG compression options to be a proprietary secret. Although

they'll tell you that subsampling is turned on at some point and turned

off at some other point, they won't tell you which settings (such as Fair,

Good, Better, or Best) have it turned on, and which settings have it turned

off. So, the only option is to use the highest quality setting available.
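
If you're curious about what your camera actually did, the file itself records it. A standard JPEG stream carries a "start of frame" segment listing horizontal and vertical sampling factors for each channel, and when the channels don't all share the same factors, the color has been subsampled. The sketch below reads those factors. It assumes a well-formed, standard JPEG header; the function names and the synthetic demo header are my own, and this is a simplified reader rather than a full parser.

```python
import struct

# Start-of-frame markers (0xC0-0xCF, minus the three that mean something else).
SOF_MARKERS = {0xC0, 0xC1, 0xC2, 0xC3, 0xC5, 0xC6, 0xC7,
               0xC9, 0xCA, 0xCB, 0xCD, 0xCE, 0xCF}


def sampling_factors(data):
    """Return [(channel_id, horizontal, vertical), ...] or None if not found."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG stream (missing start-of-image marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError("expected a marker")
        marker = data[pos + 1]
        if marker == 0xFF:          # padding byte before a marker
            pos += 1
            continue
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if marker in SOF_MARKERS:
            body = data[pos + 4:pos + 2 + length]
            count = body[5]         # number of channels
            return [(body[6 + 3 * i], body[7 + 3 * i] >> 4, body[7 + 3 * i] & 0x0F)
                    for i in range(count)]
        if marker == 0xDA:          # image data starts; stop looking
            break
        pos += 2 + length
    return None


def is_subsampled(factors):
    """True when the channels do not all share the same sampling factors."""
    return len({(h, v) for _, h, v in factors}) > 1


def demo_header(luma_factors):
    """Build a tiny image-less header for demonstration (not a viewable JPEG)."""
    channels = bytes([1, luma_factors, 0, 2, 0x11, 1, 3, 0x11, 1])
    frame = b"\x08" + struct.pack(">HHB", 16, 16, 3) + channels
    return b"\xff\xd8\xff\xc0" + struct.pack(">H", len(frame) + 2) + frame


print(is_subsampled(sampling_factors(demo_header(0x22))))  # True
print(is_subsampled(sampling_factors(demo_header(0x11))))  # False
```

To check a real photo, you would pass the bytes of the file (for example, the result of reading it in binary mode) to `sampling_factors` in place of the demo header. This only tells you after the fact, of course; it doesn't change the advice above.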

You may have already suspected that the highest quality setting was the best

option, and subsampling explains why this is the case. If camera manufacturers

would tell us a little more about how their (very subjectively) named settings

work, maybe it would be possible to use "Better" instead of

"Best," and fit even more images in any given storage space. However,

without this information, using the best available setting is always the best

option.