How Autofocus Works: The Story Behind This Invaluable Tech Once Considered a “Gimmick”

When, in 1985, autofocus first made its appearance in a popular SLR, the Minolta Maxxum 7000, I figured it was a gimmick. Hey, I’m a Homo sapiens, with an opposable thumb that allows me to focus a lens. To me, any battery-burning technology to take over this task was about as useful as a robot finger to punch the shutter.

Leica had developed autofocus in the 1970s and apparently felt the same way. They reckoned that anyone who could afford one of their cameras knew how to turn the focus ring. So, they sold the technology to Minolta.

Well, I’ve undergone an attitude adjustment. I use autofocus for maybe 70% of my shots, allowing me to shift more of my neural activity to composition.

Sure, there are situations where manual focus is better, just as there are circumstances when you want to choose manual color balance. But autofocus is a genuinely good thing, and you’ll be more interesting at social events if you know how it works.

In my naive youth, I figured autofocus functioned by actually measuring distances, either by bouncing radio waves or infrared light off the subject. Some cameras have actually gone this route, but there are all sorts of problems with an “active” autofocus scheme. For instance, glass isn’t very transparent to infrared, which means that if you tried to focus on something through a window, the result would be a sharp window, but everything else would be blurred. Quite a pane.

Instead, nearly all cameras today use passive focusing schemes: “phase detection,” “contrast detection,” or both. These technologies keep their hands to themselves. No invisible beams reach out to touch anyone, and everything’s done in-camera.

Consider contrast detection. If you aim your camera at zebras, but the lens is out of focus, the stripes will be smeared and the African equids will look kind of gray. By turning the focus ring, you can resolve the stripes, and in doing so, will increase contrast in the image (which now has both jet-black and lily-white stripes). Contrast detection automates this process: the camera compares adjacent pixels on your sensor and mechanically twists the focus ring back and forth until the contrast between them is as high as it can get.
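For the curious, the hunt for maximum contrast can be sketched in a few lines of Python. This is a toy model under loud assumptions, not any camera's firmware: the "zebra" is a one-dimensional row of pixels, defocus is faked with a simple box blur, and every name here (`make_stripes`, `blur`, `contrast`, `capture`, `autofocus`) is invented for illustration.

```python
def make_stripes(n=256, band=32):
    """A 1-D 'zebra': alternating white (1.0) and black (0.0) bands."""
    return [1.0 if (i // band) % 2 == 0 else 0.0 for i in range(n)]


def blur(signal, radius):
    """Box blur standing in for defocus; radius 0 leaves the row sharp."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def contrast(signal):
    """Sum of squared differences between adjacent pixels."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))


def capture(scene, lens_pos, true_focus):
    """Hypothetical camera: blur grows as the lens moves away from focus."""
    return blur(scene, abs(lens_pos - true_focus))


def autofocus(scene, true_focus, start=0, lo=0, hi=10):
    """Nudge the lens one step at a time, keeping any move that adds contrast."""
    pos = start
    best = contrast(capture(scene, pos, true_focus))
    while True:
        neighbors = [p for p in (pos - 1, pos + 1) if lo <= p <= hi]
        score, candidate = max(
            (contrast(capture(scene, p, true_focus)), p) for p in neighbors
        )
        if score <= best:
            return pos  # neither direction helps: call it focused
        pos, best = candidate, score
```

Note that the loop never learns how far away focus is. It only knows whether the last nudge made things better or worse, which is why it has to take a new reading after every step.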

It works, but it’s slow. So, the preferred technology these days is phase detection, which doesn’t actually measure any phases but uses the same focusing principle as a rangefinder camera. Old-timers will remember that before the advent of single-lens reflexes, their cameras had essentially a second viewfinder (really, just an additional window) that was about three inches displaced horizontally from the main viewfinder. When light from both finders was combined, the photographer saw a double image. By twisting the focus ring until these images overlapped, the user dialed in the distance.

That worked great, at least for objects that weren’t featureless and extended horizontally (e.g., a white 18-wheeler). And it was fast. For years, there was an ongoing argument between the rangefinder crowd and the SLR folks. The rangefinders were the only way to go if you needed to be nimble.

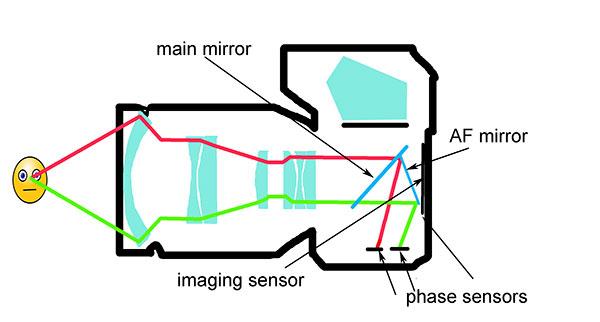

Today, phase-detection autofocus has brought the double-image approach into the digital age, and even improved it. This is usually done by having a second mirror in the camera behind the main mirror. Because the principal mirror is slightly transparent, some of the light coming from opposite sides of the lens passes through, and is deflected by the second mirror to small sensors near the bottom of the DSLR.

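These sensors register double images, just like the old rangefinders, and the camera adjusts the lens focus until they converge. Because the gap between the two images reveals both which way and how far the lens is off, the camera can head straight for focus instead of hunting back and forth. Modern cameras allow you to choose where you want focus to be sharp, and are sensitive to displacements that can be horizontal, vertical, or even diagonal.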
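The double-image idea can also be sketched in Python. Again, this is a toy under stated assumptions rather than how any particular camera does it: the two views are one-dimensional rows of pixels, defocus slides them apart by equal and opposite amounts, and the names `shift`, `split_views`, and `estimate_offset` are made up for this example.

```python
def shift(signal, k):
    """Slide a row k pixels to the right (left if k < 0), repeating edge pixels."""
    n = len(signal)
    return [signal[min(max(i - k, 0), n - 1)] for i in range(n)]


def split_views(scene, defocus):
    """Hypothetical pair of views from opposite sides of the lens.

    In this toy model, defocus pushes the two views apart by equal and
    opposite amounts; the sign says which way the lens is off.
    """
    return shift(scene, -defocus), shift(scene, defocus)


def estimate_offset(left, right, max_shift=10):
    """Find the slide that best lines the right view up with the left one."""
    n = len(left)
    best_s, best_err = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        # Compare only the pixels where the two rows still overlap.
        pairs = [(left[i], right[i + s]) for i in range(n) if 0 <= i + s < n]
        err = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if err < best_err:
            best_s, best_err = s, err
    return best_s


# An irregular row of pixels, so only one alignment fits.
scene = [0.0] * 20 + [1.0] * 5 + [0.0] * 10 + [1.0] * 12 + [0.0] * 17

left, right = split_views(scene, defocus=3)
offset = estimate_offset(left, right)
correction = -offset // 2  # one reading gives both direction and distance
```

In this model the measured offset is simply twice the defocus, so a single comparison tells the camera how far to move the lens and which way. A real camera needs a calibrated conversion from image separation to lens travel, which this sketch does not attempt.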

While phase detection is the number one choice in DSLRs, many cameras switch to contrast detection in live view, when the mirror is up and no light reaches the phase-detection sensors.

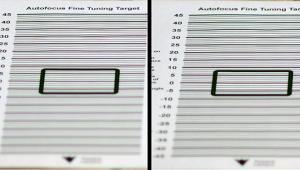

Phase detection involves the equivalent of a second camera within a camera, and this can lead to problems if the optical path to the imaging sensor and the phase-detection sensor aren’t identical. Many cameras have the built-in capability to adjust this. In case you can’t figure that out on your own, be warned: we’ll take it up next time.

Seth Shostak is an astronomer at the SETI Institute who thinks photography is one of humanity’s greatest inventions. His photos have been used in countless magazines and newspapers, and he occasionally tries to impress folks by noting that he built his first darkroom at age 11. You can find him on both Facebook and Twitter.